RLX COMPONENTS s.r.o., Electronic Components Distributor.

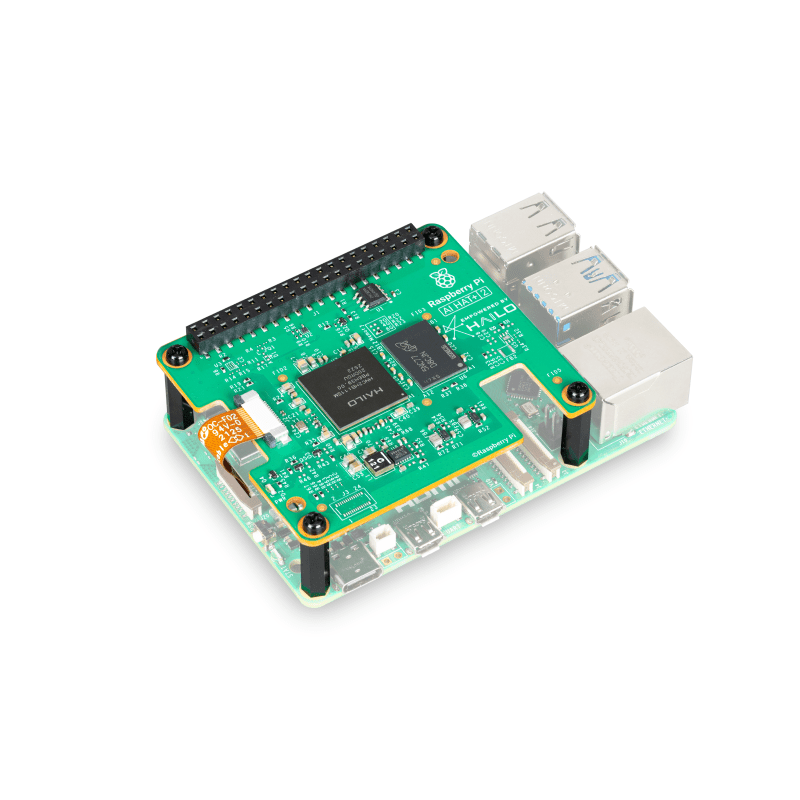

Raspberry Pi AI HAT+ 2 for Raspberry Pi 5 (SC2166) Hailo-10H, 40TOPS (INT4)

Thanks to its new Hailo-10H neural network accelerator, the Raspberry Pi AI HAT+ 2 delivers 40 TOPS (INT4) of inferencing performance, so generative AI workloads run smoothly on Raspberry Pi 5. The AI HAT+ 2 performs all AI processing locally and without a network connection. It operates reliably and with low latency, and it keeps the privacy, security, and cost-efficiency of cloud-free AI computing that we introduced with the original AI HAT+. Unlike its predecessor, the AI HAT+ 2 has 8 GB of dedicated on-board RAM, which lets the accelerator handle much larger models efficiently than was previously possible. Together with an updated hardware architecture, this allows the Hailo-10H chip to accelerate large language models (LLMs), vision-language models (VLMs), and other generative AI applications. For vision-based models, such as Yolo-based object recognition, pose estimation, and scene segmentation, the AI HAT+ 2's computer vision performance is broadly equivalent to that of its 26-TOPS predecessor, thanks to the on-board RAM. It also benefits from the same tight integration with our camera software stack (libcamera, rpicam-apps, and Picamera2) as the original AI HAT+. For users already working with the AI HAT+ software, moving to the AI HAT+ 2 is mostly seamless and transparent.

Official Raspberry Pi AI HAT+ 2: a built-in Hailo-10H AI accelerator and 8GB of dedicated on-board RAM bring generative AI capability to Raspberry Pi 5

High-performance AI HAT Designed By Raspberry Pi

| Model | Raspberry Pi AI HAT+ | Raspberry Pi AI HAT+ 2 |
|---|---|---|
| Accelerator chip (Hailo NPU) | Hailo-8L (13 TOPS, INT8) / Hailo-8 (26 TOPS, INT8) | Hailo-10H (40 TOPS, INT4) |
| Memory (RAM) | Uses the memory on a Raspberry Pi 5 | Has its own 8 GB on-board memory, allowing it to run LLMs and VLMs of up to ~6 billion parameters |
| Large Language Model (LLM) support | Not supported | Supported |
| Vision-Language Model (VLM) support | Not supported | Supported |
| Use cases | Object detection, camera post-processing, robotics, moderate neural workloads | Everything available on AI HAT+ plus generative AI workloads, including local LLMs and VLMs |
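The "~6 billion parameters" figure in the table can be sanity-checked with simple weight-storage arithmetic: weights need roughly parameters × bits per weight ÷ 8 bytes. The sketch is a back-of-the-envelope estimate only, assuming every weight is stored at one fixed bit width and treating the board's 8 GB as 8 GiB; it ignores the KV cache, activations, and runtime overhead, and `reserved_fraction` is a hypothetical allowance for those, not a Hailo setting.

```python
# Back-of-the-envelope estimate of how much memory model *weights* need.
# Assumption: every weight is stored at the same bit width. The KV cache,
# activations and runtime overhead are ignored, so real usage is higher.

GIB = 1024 ** 3  # bytes in one GiB


def weight_bytes(num_params: float, bits_per_weight: int) -> float:
    """Bytes needed to store the weights alone: params x bits / 8."""
    return num_params * bits_per_weight / 8


def max_params(budget_bytes: float, bits_per_weight: int,
               reserved_fraction: float = 0.0) -> float:
    """Largest parameter count whose weights fit in the budget after setting
    aside `reserved_fraction` of it (a hypothetical allowance for KV cache,
    activations and the runtime)."""
    usable = budget_bytes * (1.0 - reserved_fraction)
    return usable * 8 / bits_per_weight


if __name__ == "__main__":
    for params in (1.0e9, 1.5e9, 7.0e9):
        for bits in (4, 8, 16):
            gib = weight_bytes(params, bits) / GIB
            print(f"{params / 1e9:.1f}B params at {bits}-bit: {gib:.2f} GiB")
    # Upper bound for 8 GiB of on-board RAM at 4 bits per weight, weights only.
    print(f"Ceiling: {max_params(8 * GIB, 4) / 1e9:.1f}B params")
```

At 4 bits per weight, a 1.5-billion-parameter model needs about 0.75 GB for its weights, and 8 GiB could in principle hold the weights of roughly 17 billion parameters. The gap between that ceiling and the quoted ~6 billion presumably reflects the overheads the sketch ignores (and possibly higher-precision layers); the exact breakdown on the Hailo-10H is not stated here.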

The Raspberry Pi AI HAT+ 2 is an add-on board based on the Hailo-10H AI accelerator that brings generative AI capability to Raspberry Pi 5. With 8GB of dedicated on-board RAM, the AI HAT+ 2 is ideal for running large language models (LLMs) and vision-language models (VLMs) locally, leaving the host Raspberry Pi 5 free to handle other tasks. The AI HAT+ 2 delivers reliable, low-latency, accelerated generative AI at the edge, making it the perfect choice for applications including offline process control, secure data analysis, facilities management, and robotics.

The AI HAT+ 2 delivers 40 tera-operations per second (TOPS) of INT4 inferencing performance, with computer vision performance equivalent or superior to the 26 TOPS Raspberry Pi AI HAT+. A set of sample models is provided by Hailo; users can also train custom vision models or fine-tune generative AI models using Low-Rank Adaptation (LoRA) to suit their application, such as speech to text, translation, or visual scene analysis.

Like the original Raspberry Pi AI HAT+, the AI HAT+ 2 communicates using Raspberry Pi 5’s PCI Express interface. When the host Raspberry Pi 5 is running an up-to-date Raspberry Pi OS image, it automatically detects the on-board Hailo accelerator and makes it available for AI computing tasks. The built-in rpicam-apps camera applications in Raspberry Pi OS natively support the AI module, automatically using the accelerator to run compatible post-processing tasks.
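Because the HAT shows up as an ordinary PCIe device, one quick way to confirm that the host has detected it is to scan Linux's PCI sysfs tree for Hailo's vendor ID. A minimal sketch, assuming the Hailo-10H reports the same PCI vendor ID (0x1e60) as Hailo-8 parts do; `find_hailo_devices` is a hypothetical helper, not part of Raspberry Pi OS or Hailo's software.

```python
from pathlib import Path

# Assumption: Hailo devices report PCI vendor ID 0x1e60 (as Hailo-8 parts do);
# verify against `lspci -nn` on your own system.
HAILO_PCI_VENDOR_ID = "0x1e60"


def find_hailo_devices(sysfs_root: str = "/sys/bus/pci/devices") -> list[str]:
    """Return the PCI addresses of devices whose vendor ID matches Hailo's."""
    root = Path(sysfs_root)
    if not root.is_dir():
        # Not a Linux system with PCI sysfs (or no PCI bus exposed).
        return []
    matches = []
    for device in sorted(root.iterdir()):
        try:
            vendor = (device / "vendor").read_text().strip().lower()
        except OSError:
            continue  # entry without a readable vendor attribute
        if vendor == HAILO_PCI_VENDOR_ID:
            matches.append(device.name)
    return matches


if __name__ == "__main__":
    devices = find_hailo_devices()
    if devices:
        print("Hailo accelerator found at:", ", ".join(devices))
    else:
        print("No Hailo accelerator detected on the PCIe bus.")
```

Running `lspci -nn` shows the same vendor and device IDs interactively; the script form is handy in a startup check before launching an AI workload.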

Key features include:

With 8GB of dedicated on-board RAM, the AI HAT+ 2 is ideal for running large language models (LLMs) and vision-language models (VLMs) locally, leaving the host Raspberry Pi 5 free to handle other tasks and ensuring your AI models run smoothly.

Delivering reliable, low-latency AI processing at the edge and without a network connection, the AI HAT+ 2 simplifies data security, minimises infrastructure requirements, and reduces cloud API costs.

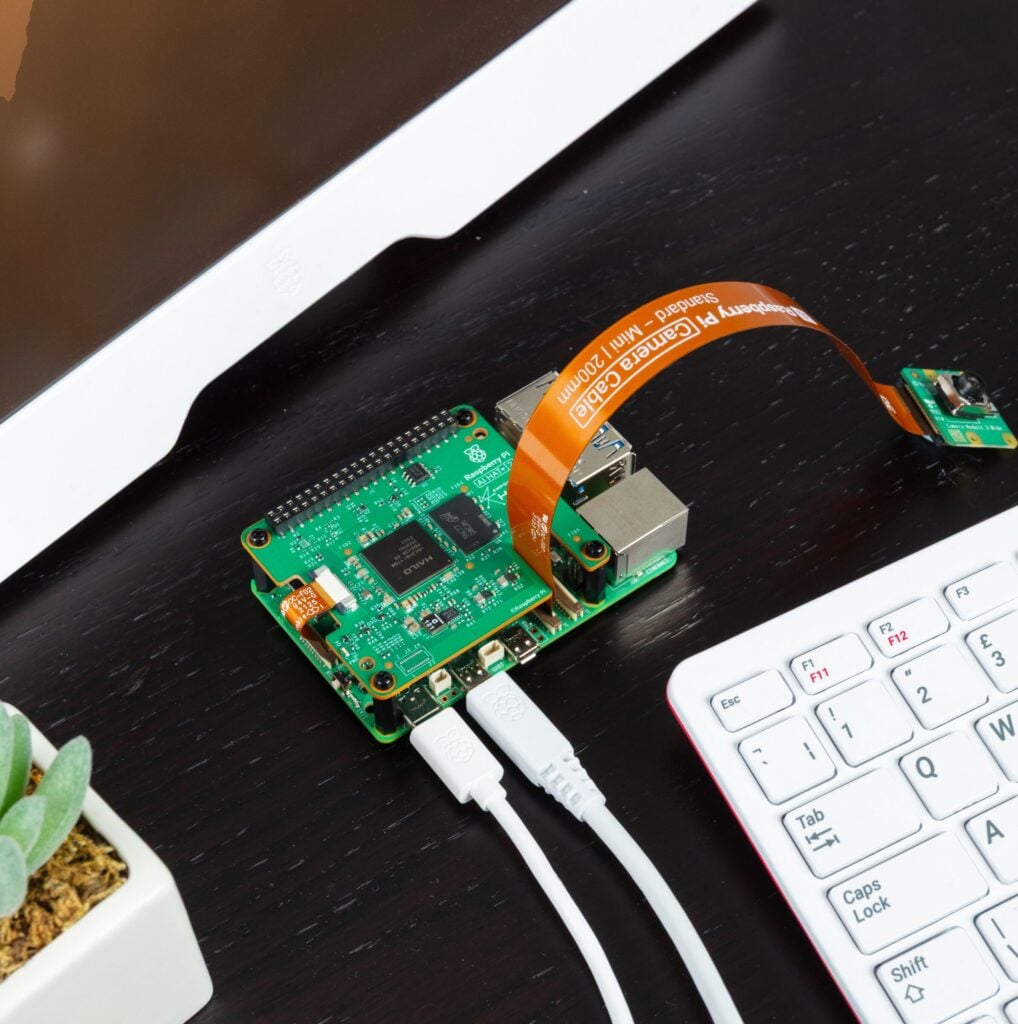

* For reference only; the Raspberry Pi 5 and Raspberry Pi 5 Official Active Cooler are NOT included.

Package contents: Raspberry Pi AI HAT+ 2, heatsink

The following LLMs will be available to install at launch:

| Model | Parameters |
|---|---|
| DeepSeek-R1-Distill | 1.5 billion |
| Llama3.2 | 1 billion |
| Qwen2.5-Coder | 1.5 billion |
| Qwen2.5-Instruct | 1.5 billion |
| Qwen2 | 1.5 billion |

More (and larger) models are being readied for updates, and should be available to install soon after launch.

Let’s take a quick look at some of these models in action. The following examples use the hailo-ollama LLM backend (available in Hailo’s Developer Zone) and the Open WebUI frontend, providing a familiar chat interface via a browser. All of these examples are running entirely locally on a Raspberry Pi AI HAT+ 2 connected to a Raspberry Pi 5.
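Open WebUI talks to the hailo-ollama backend over HTTP, so a script can do the same. A minimal sketch, assuming hailo-ollama implements Ollama's non-streaming `/api/generate` endpoint (JSON `model`, `prompt`, and `stream` fields in, a `response` field out); the port (8000) and the model tag `qwen2:1.5b` are hypothetical placeholders, so check Hailo's Developer Zone documentation for the actual values.

```python
import json
import urllib.request

# Assumptions (check Hailo's Developer Zone documentation for your setup):
#  - hailo-ollama exposes an Ollama-style /api/generate endpoint;
#  - it listens on localhost port 8000 (hypothetical; adjust as needed);
#  - "qwen2:1.5b" is a hypothetical model tag for the Qwen2 model.
BASE_URL = "http://localhost:8000"
MODEL = "qwen2:1.5b"


def build_generate_request(prompt: str, model: str = MODEL,
                           base_url: str = BASE_URL) -> urllib.request.Request:
    """Build a non-streaming Ollama-style generate request."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url=f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the text from the reply's 'response' field."""
    request = build_generate_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    return body["response"]
```

With the server running, `generate("Translate to English: Bonjour !")` returns the model's reply as a single string. Setting `stream` to false trades token-by-token output for one JSON reply that is easy to parse in a script.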

The first example uses the Qwen2 model to answer a few simple questions:

The next example uses the Qwen2.5-Coder model to perform a coding task:

This example does some simple French-to-English translation using Qwen2:

The final example shows a VLM describing the scene from a camera stream:

By far the most popular examples of generative AI models are LLMs like ChatGPT and Claude, text-to-image/video models like Stable Diffusion and DALL-E, and, more recently, VLMs that combine the capabilities of vision models and LLMs. Although the examples above showcase the capabilities of the available AI models, one must keep their limitations in mind: cloud-based LLMs from OpenAI, Meta, and Anthropic range from 500 billion to 2 trillion parameters; the edge-based LLMs running on the Raspberry Pi AI HAT+ 2, which are sized to fit into the available on-board RAM, typically run at 1–7 billion parameters. Smaller LLMs like these are not designed to match the knowledge set available to the larger models, but rather to operate within a constrained dataset.

This limitation can be overcome by fine-tuning the AI models for your specific use case. On the original Raspberry Pi AI HAT+, vision models (such as Yolo) can be retrained using image datasets suited to the intended application. The same is possible on the Raspberry Pi AI HAT+ 2, using the Hailo Dataflow Compiler.

Similarly, the AI HAT+ 2 supports Low-Rank Adaptation (LoRA)–based fine-tuning of the language models, enabling efficient, task-specific customisation of pre-trained LLMs while keeping most of the base model parameters frozen. Users can compile adapters for their particular tasks using the Hailo Dataflow Compiler and run the adapted models on the Raspberry Pi AI HAT+ 2.
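The saving behind LoRA is easy to see with a little arithmetic. In the usual formulation, a frozen d × k weight matrix W receives a low-rank update (alpha / r) · B · A, where B is d × r and A is r × k, so only r(d + k) values are trained instead of d · k. The sketch illustrates that maths only; it is not the Hailo Dataflow Compiler workflow, and the layer size and rank in the usage note are hypothetical.

```python
# LoRA keeps a d x k weight matrix W frozen and learns a low-rank update:
#   W' = W + (alpha / r) * B @ A,  with B of shape d x r and A of shape r x k.
# Only A and B are trained.


def full_params(d: int, k: int) -> int:
    """Parameters in the frozen base weight matrix W (d x k)."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters in the adapter: B (d x r) plus A (r x k)."""
    return d * r + r * k


def trainable_fraction(d: int, k: int, r: int) -> float:
    """Share of the layer's parameter count that LoRA actually trains."""
    return lora_params(d, k, r) / full_params(d, k)


def apply_lora(W, A, B, alpha: float, r: int):
    """Return W + (alpha / r) * B @ A, using plain nested lists as matrices."""
    scale = alpha / r
    d, k = len(W), len(W[0])
    return [
        [W[i][j] + scale * sum(B[i][t] * A[t][j] for t in range(r))
         for j in range(k)]
        for i in range(d)
    ]
```

For a hypothetical 1536 × 1536 projection with rank r = 8, `lora_params(1536, 1536, 8)` is 24,576 against 2,359,296 frozen weights, so `trainable_fraction` returns 1/96, roughly 1% of the layer.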

The Raspberry Pi AI HAT+ 2 is available now at $130. For help setting yours up, check out our AI HAT guide.

Hailo’s GitHub repo provides plenty of examples, demos, and frameworks for vision- and GenAI-based applications, such as VLMs, voice assistants, and speech recognition. You can also find documentation, tutorials, and downloads for the Dataflow Compiler and the hailo-ollama server in Hailo’s Developer Zone.